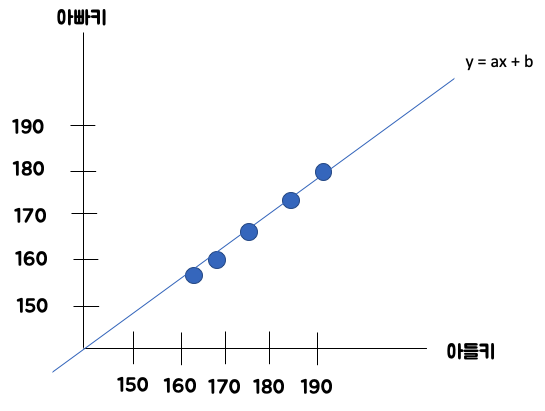

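When we learned math in school, we wrote that line as y = ax + b.

Here we will write the same line as Wx + b. W (the weight) plays the role of the slope a, and b (the bias) is the intercept.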
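Written out, the model and loss used in the TensorFlow code take the following form, with $n$ = `n_samples` and $\alpha$ = `learning_rate`. $J$ corresponds to the `mean_square` function, including its $1/2n$ factor. The two partial derivatives are what `GradientTape` computes, and the last line is the plain gradient-descent update that `SGD` applies. The symbols $\hat{y}_i$ and $J$ are notation added here and are not from the original code. The $1/2$ factor only scales the gradients. It does not change which W and b minimize the loss.

$$
\hat{y}_i = W x_i + b
$$

$$
J(W, b) = \frac{1}{2n} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)^2
$$

$$
\frac{\partial J}{\partial W} = \frac{1}{n} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right) x_i,
\qquad
\frac{\partial J}{\partial b} = \frac{1}{n} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)
$$

$$
W \leftarrow W - \alpha \frac{\partial J}{\partial W},
\qquad
b \leftarrow b - \alpha \frac{\partial J}{\partial b}
$$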

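import tensorflow as tf
print(tf.__version__)  # Check the TensorFlow version

# If this prints 1.x, uncomment and run the lines below in a notebook cell
# (then restart the runtime so the new version is loaded)
# !pip uninstall tensorflow --yes
# !pip install tensorflow==2.0.0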

import numpy as np
rng = np.random

# Parameters
learning_rate = 0.01
training_steps = 1000
display_step = 50

# Training data (float32 so it matches the dtype of W and b)
X = np.array([1, 2, 3, 4], dtype=np.float32)
Y = np.array([1, 2, 3, 4], dtype=np.float32)
n_samples = X.shape[0]

# Initialize W and b with random values
W = tf.Variable(rng.randn(), name="weight")
b = tf.Variable(rng.randn(), name="bias")

# Linear regression (Wx + b).
def linear_regression(x):
    return W * x + b

# Mean squared error: the orange lines (errors) between the points and the line in the figure
def mean_square(y_pred, y_true):
    return tf.reduce_sum(tf.pow(y_pred - y_true, 2)) / (2 * n_samples)

# Apply gradient descent
optimizer = tf.optimizers.SGD(learning_rate)

# One optimization step
def run_optimization():
    # Wrap computation inside a GradientTape for automatic differentiation.
    with tf.GradientTape() as g:
        pred = linear_regression(X)
        loss = mean_square(pred, Y)
    # Compute the gradients of the loss with respect to W and b.
    gradients = g.gradient(loss, [W, b])
    # Update W and b following the gradients.
    optimizer.apply_gradients(zip(gradients, [W, b]))

# Start training
for step in range(1, training_steps + 1):
    # Run the optimization to update W and b values.
    run_optimization()
    if step % display_step == 0:
        pred = linear_regression(X)
        loss = mean_square(pred, Y)
        print("step: %i, loss: %f, W: %f, b: %f" % (step, loss, W.numpy(), b.numpy()))

import matplotlib.pyplot as plt

# Visualization
plt.plot(X, Y, 'ro', label='Original data')
plt.plot(X, np.array(W * X + b), label='Fitted line')
plt.legend()
plt.show()

# Code reference:
# https://github.com/aymericdamien/TensorFlow-Examples/blob/master/tensorflow_v2/notebooks/2_BasicModels/linear_regression.ipynb
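To show what `GradientTape` and `SGD` do inside the loop above, here is a minimal plain-Python sketch that writes out the half-MSE gradients by hand. The function names `fit_linear_gd` and `half_mse`, the zero starting values, and the second learning rate of 0.1 are illustrative choices and are not part of the original code.

```python
# Plain-Python sketch of the training loop above, with the gradients of
# the half-MSE loss sum((W*x + b - y)^2) / (2n) written out by hand.

def fit_linear_gd(xs, ys, w=0.0, b=0.0, learning_rate=0.01, steps=1000):
    """Fit y = w*x + b by full-batch gradient descent on the half-MSE loss."""
    n = len(xs)
    for _ in range(steps):
        # Residuals (prediction - target) for every sample.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # dJ/dw = (1/n) * sum(error * x),  dJ/db = (1/n) * sum(error)
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        # Plain gradient-descent update (SGD without momentum).
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def half_mse(xs, ys, w, b):
    """Same loss as mean_square above: sum of squared errors / (2n)."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (2 * n)


if __name__ == "__main__":
    X = [1.0, 2.0, 3.0, 4.0]
    Y = [1.0, 2.0, 3.0, 4.0]
    for lr in (0.01, 0.1):
        w, b = fit_linear_gd(X, Y, learning_rate=lr, steps=1000)
        print(f"lr={lr}: W={w:.4f}, b={b:.4f}, loss={half_mse(X, Y, w, b):.6f}")
```

Because this loss is a quadratic, the learning rate strongly affects how far 1000 steps get. Starting from W = b = 0, learning_rate=0.01 still leaves the fit noticeably off (b stays above 0.01), while 0.1 gets essentially to W = 1, b = 0. The TensorFlow version starts from random values, so its printed numbers will vary from run to run.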

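# Change the TensorFlow version (uncomment if needed)
# !pip uninstall tensorflow --yes
# !pip install tensorflow==2.0.0

import tensorflow as tf
print(tf.__version__)

import numpy as np

# Training data: y = 1.5x, shaped (samples, features) for the Dense layer
x_train = np.array([[1], [2], [3], [4]], dtype=np.float32)
y_train = np.array([[1.5], [3], [4.5], [6]], dtype=np.float32)

# A single Dense unit with one input computes Wx + b
model = tf.keras.models.Sequential()
model.add(tf.keras.layers.Dense(1, input_dim=1))

# `learning_rate` is the current name of the older `lr` argument
sgd = tf.keras.optimizers.SGD(learning_rate=0.01)
model.compile(loss="mean_squared_error", optimizer=sgd)
model.fit(x_train, y_train, epochs=1000)

# Predict y for x = 4 (ideally close to 6)
print(model.predict(np.array([[4]], dtype=np.float32)))

# Code reference:
# https://www.youtube.com/watch?v=YT2Uy5x3gaA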
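As a sanity check on the Keras result, the exact least-squares line for the same data can be computed in closed form. Here is a minimal plain-Python sketch. The helper name `least_squares_line` is illustrative. Keras's `mean_squared_error` has no 1/2 factor, but that does not change which line minimizes it.

```python
# Closed-form least-squares fit of y = W*x + b, to compare against the
# line the Keras model learns by SGD (plain Python, no TensorFlow needed).

def least_squares_line(xs, ys):
    """Return (W, b) minimizing sum((W*x + b - y)^2)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # W = cov(x, y) / var(x); the 1/n factors cancel out.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    w = sxy / sxx
    # The best-fit line always passes through (mean_x, mean_y).
    b = mean_y - w * mean_x
    return w, b


x_train = [1, 2, 3, 4]
y_train = [1.5, 3, 4.5, 6]
w, b = least_squares_line(x_train, y_train)
print(f"W = {w:.4f}, b = {b:.4f}, prediction at x=4: {w * 4 + b:.4f}")
```

Since `y_train` is exactly 1.5x, this gives W = 1.5, b = 0, and a prediction of 6 at x = 4. The Keras model starts from random weights and runs a fixed 1000 epochs of SGD, so it should print something close to, but not exactly, 6.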
